"A meticulous virtual copy of the human brain would enable basic research on brain cells and circuits or computer-based drug trials" Henry Markram

What is the fastest computer in the world? What a difficult question! Rapid progress in technology keeps producing new ways to process data. However, the performance of even the best supercomputers is still far from that of our own brains.

Despite its small size and jelly-like structure, the brain has much more to offer than we can even imagine. It solves complex tasks faster than the blink of an eye while storing a tremendous amount of data.

The brain has fascinated humanity for centuries. Today, biological neural networks inspire the creation of artificial neural networks for superfast operations. Thanks to the brain, we are a step closer to a new generation of supercomputers.

The structure of the brain

The brain looks relatively inconspicuous, doesn’t it? It can be described as a jelly-like sponge that produces electricity. It weighs less than 1.5 kg and consists mainly of water. It hides mysteries that humans have been trying to solve for a long time. It is the command center that decides who we are. It receives and processes information from the external environment and from within our body. So far, we know of no more complex system for processing data from external stimuli.

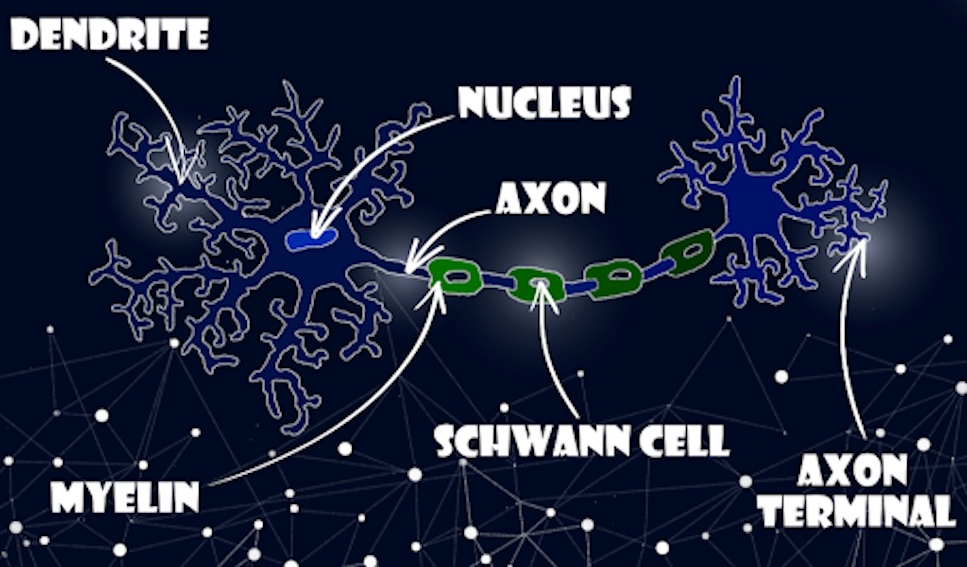

When a neuron receives an electrical signal, the signal travels along its axon the way electricity runs through a cable or water runs through a pipe.

Axons can be one meter long! Many of them are insulated by a protective layer called the myelin sheath, formed by cells such as Schwann cells, which speeds up the transmission of impulses. Axons have multiple branches that connect with the dendrites of other neurons, enabling information transfer. Even while we sleep, the brain receives various data and performs complex calculations the whole time.

Information flow

Neurons communicate with each other through synapses, the gaps at the ends of neurons where electrical or chemical signals are transmitted. Chemical signals are carried by molecules called neurotransmitters, such as serotonin, dopamine, and adrenaline.

Through these two types of signals, the brain sends instructions along the nerve cells to the muscles and organs of the body, controlling processes that range from hormone and blood pressure regulation to breathing and sleeping.

All of this takes place in the brain. Biological neural networks are responsible for memory, cognitive functions, intelligence, the ability to think logically, and emotions. The neural network makes our brain a unique and complex data processing unit that performs many operations using just two types of signals.

What if we try to mimic these neural connections and create an artificial copy of them? This is happening now, worldwide. The signals in our brain are sequences of electrical impulses (so-called action potentials), and such a sequence can be described in binary: 1 where an impulse occurs and 0 where it does not. So, in principle, the processes taking place in the brain can be represented by numbers.
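The binary description of impulses can be illustrated with a minimal sketch. It assumes a made-up voltage trace and a hypothetical detection threshold of -20 mV; neither value comes from the article, and real spike detection is more involved.

```python
def to_spike_train(voltages_mv, threshold_mv=-20.0):
    """Mark each upward crossing of the threshold with 1, everything else with 0."""
    spikes = []
    previous_above = False
    for voltage in voltages_mv:
        above = voltage >= threshold_mv
        # Count an impulse only at the moment the voltage rises above the threshold
        spikes.append(1 if above and not previous_above else 0)
        previous_above = above
    return spikes


# Hypothetical membrane potential samples in millivolts (illustrative values only)
trace = [-70.0, -65.0, 30.0, -75.0, -70.0, -68.0, 25.0, 28.0, -72.0]
spike_train = to_spike_train(trace)
print(spike_train)
```

Each 1 in the result marks the start of one impulse, so two neighbouring samples above the threshold count as a single action potential.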

Mapping the brain

Artificial neural networks can be divided into groups depending on the number and arrangement of neurons, the learning method, and the direction of information flow. Among the learning methods, we have supervised learning (the network is trained toward a specific, known result) and unsupervised learning (the network is given no target result and aims to detect sudden changes and previously unknown dependencies in the data).
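The difference between the two learning methods can be sketched on a toy example. This is only an illustration under simple assumptions: the data are six made-up numbers, the supervised part is a nearest-class-mean threshold, and the unsupervised part is a one-dimensional k-means with two groups. Neither is a neural network; they stand in for the two ideas.

```python
def fit_threshold(values, labels):
    """Supervised: use the known labels to place a boundary between the class means."""
    mean0 = sum(v for v, y in zip(values, labels) if y == 0) / labels.count(0)
    mean1 = sum(v for v, y in zip(values, labels) if y == 1) / labels.count(1)
    return (mean0 + mean1) / 2


def two_means(values, steps=10):
    """Unsupervised: find two group centres without any labels (1-D k-means, k=2).

    Assumes the values are not all identical, so both groups stay non-empty.
    """
    low, high = min(values), max(values)
    for _ in range(steps):
        group_low = [v for v in values if abs(v - low) <= abs(v - high)]
        group_high = [v for v in values if abs(v - low) > abs(v - high)]
        low = sum(group_low) / len(group_low)
        high = sum(group_high) / len(group_high)
    return low, high


# Made-up measurements; the labels are available only to the supervised method
values = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
labels = [0, 0, 0, 1, 1, 1]

threshold = fit_threshold(values, labels)
centres = two_means(values)
print(threshold, centres)
```

The supervised function is told which class each value belongs to, while the unsupervised one has to discover the two groups on its own.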

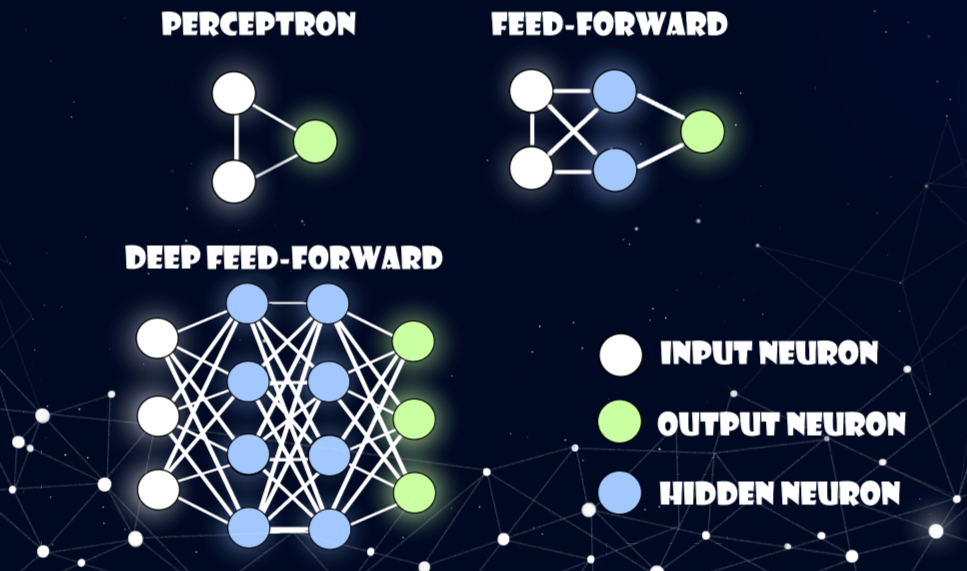

The most straightforward artificial neural network is a perceptron (a single neuron). Compared to the brain, where a single neuron can form up to 200,000 connections, the perceptron is quite primitive. Although artificial neural networks are inspired by biological processes, mapping the brain's connections is still an uphill struggle. Therefore, scientists build artificial neural networks starting from single units.
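A single perceptron can be sketched in a few lines. The version below assumes the classic form (a weighted sum of the inputs plus a bias, followed by a step function); the weights and bias are hand-picked for illustration so that the neuron behaves like a logical AND.

```python
def perceptron(inputs, weights, bias):
    """Return 1 if the weighted sum of the inputs plus the bias is positive, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


# Hand-picked (not learned) parameters that make the neuron fire only for input (1, 1)
and_weights = [1.0, 1.0]
and_bias = -1.5

and_outputs = [perceptron([a, b], and_weights, and_bias) for a in (0, 1) for b in (0, 1)]
print(and_outputs)
```

The inputs are tried in the order (0, 0), (0, 1), (1, 0), (1, 1), and only the last one pushes the sum above zero.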

In a single perceptron, the signal enters and leaves the neuron without any communication with other neurons. To build more complex networks, perceptrons (neurons) are arranged in layers. The first and last layers are connected through intermediate layers, called hidden layers, which pass the signal on from one layer to the next. Rather than making the final decision, the input neurons take in the details of the input signal, and each subsequent layer deepens the analysis. In other words, the signal goes from the input neurons through the hidden neurons, which carry out most of the calculations, to the output neurons. Finally, the output layer makes the decision and passes the result on.

Neurons can be connected to each other in many ways. Depending on the connections, we can distinguish several types of artificial neural networks. For example, in a multilayer perceptron network (Fig. 2), information flows in only one direction: from the input layer, through the hidden layers (if any), to the output layer. With few or no hidden layers, such a network can perform only a limited range of operations. To increase its capabilities, more hidden layers of neurons can be added.
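Why a hidden layer increases capability can be shown with a small sketch. It assumes step-function neurons and hand-picked weights (nothing here is trained): the network computes XOR, a function that a single neuron of this kind cannot represent.

```python
def neuron(inputs, weights, bias):
    """Step-function neuron: 1 if the weighted sum plus bias is positive, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


def layer(inputs, weight_rows, biases):
    """Apply one neuron per row of weights to the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


def xor_network(a, b):
    """Signal flows one way: input layer -> hidden layer -> output layer."""
    # Hidden layer: first neuron acts as OR, second as AND (hand-picked weights)
    hidden = layer([a, b], [[1.0, 1.0], [1.0, 1.0]], [-0.5, -1.5])
    # Output neuron fires when OR is on and AND is off
    output = layer(hidden, [[1.0, -1.0]], [-0.5])
    return output[0]


xor_outputs = [xor_network(a, b) for a in (0, 1) for b in (0, 1)]
print(xor_outputs)
```

Removing the hidden layer would leave a single neuron, which can only separate its inputs with one straight line and therefore cannot produce this pattern.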

To bring artificial neural networks closer to the brain’s structure, scientists have to reach for brain-inspired architectures. In the brain, the signal does not simply run in one direction from an “input neuron” to an “output neuron”; it traverses the entire biological network. In this process, feedback appears in the signals, which scientists interpret as a mechanism for training the network.
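The effect of feedback on signal flow can be sketched with a single artificial neuron whose previous output is fed back into its input. This is an assumption-laden toy (a tanh activation and made-up weights), not a model of any real brain circuit and not a training procedure.

```python
import math


def run_with_feedback(inputs, input_weight, feedback_weight):
    """Feed the neuron's previous output back into its next input."""
    state = 0.0
    history = []
    for x in inputs:
        state = math.tanh(input_weight * x + feedback_weight * state)
        history.append(state)
    return history


# A single input pulse followed by silence (illustrative values)
pulse = [1.0, 0.0, 0.0, 0.0]

feedforward_only = run_with_feedback(pulse, input_weight=1.0, feedback_weight=0.0)
with_feedback = run_with_feedback(pulse, input_weight=1.0, feedback_weight=0.9)
print(feedforward_only)
print(with_feedback)
```

Without feedback the activity disappears as soon as the input stops; with feedback the neuron keeps a fading trace of the earlier pulse, so the past influences what happens next.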

Artificial neural networks are constantly being developed to handle more complex operations and to mimic processes in our brains. Still, there are many mysteries to solve, such as the nature of neural coding. We are trying to understand the tremendous data processing efficiency of our brains and the mechanisms that regulate their work. Hopefully, sooner or later, we will find an explanation. Mathematical models like artificial neural networks will certainly play a key role in solving such mysteries.

This article is a joint work of Agnieszka Pregowska (Institute of Fundamental Technological Research, Polish Academy of Sciences) and Magdalena Osial (Faculty of Chemistry, University of Warsaw) as a Science Embassy Project. Image credit: Magdalena Osial.
