A few weeks ago, I was at the airport in Milan, about to fly to Amsterdam. Even though I had arrived on time, I started worrying about missing my flight because the queue at the security check was so long.

As I stood in line, I wondered why the managers of the airport did not send some personnel to help those of us who had an imminent flight, or simply open more security check booths to speed up the process. I also wondered why they had not predicted such a bottleneck well in advance. After all, it should be enough to recognize that the rate at which people were arriving at the end of the queue was higher than the rate at which people were leaving the head of the queue. When that happens, a queue forms and keeps growing over time.
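
The reasoning above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the arrival and departure rates are constant, and the numbers are made up rather than measured at any airport.

```python
def queue_length_over_time(arrivals_per_min, departures_per_min,
                           initial_length=0, minutes=60):
    """Return the queue length at the end of each minute.

    Assumes constant (hypothetical) arrival and departure rates.
    """
    lengths = []
    length = initial_length
    for _ in range(minutes):
        # The queue changes by the difference between the two rates,
        # and it can never hold fewer than zero people.
        length = max(0, length + arrivals_per_min - departures_per_min)
        lengths.append(length)
    return lengths


# 12 people join per minute while only 9 pass the check: the queue grows.
growing = queue_length_over_time(arrivals_per_min=12, departures_per_min=9,
                                 minutes=30)
print(growing[-1])
```

Whenever the arrival rate exceeds the departure rate, the length grows by the difference every minute, which is exactly the early warning sign one would want to monitor.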

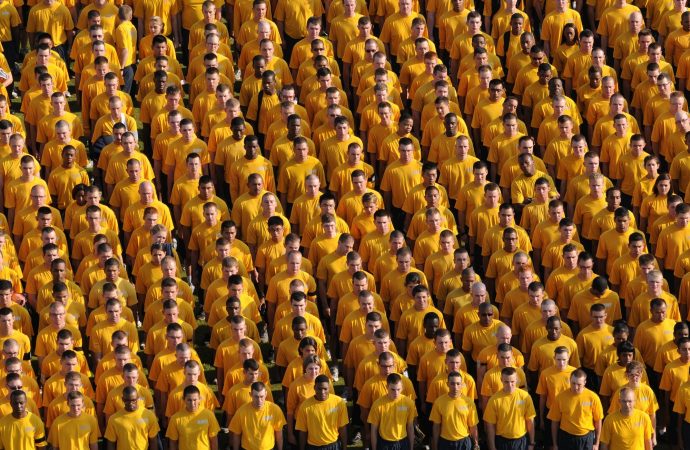

Collective behavior

Many collective behaviors are characterized, among other things, by a strong spatiotemporal nature. The behavior of individuals is influenced by the environment in which they are embedded, which includes other individuals. Typically, nearby objects influence behavior more than objects far away. In the case of a queue, we enter the queue behind the person who arrived before us, and our turn to leave comes when nobody is in front of us. In the case of pedestrian lanes on a sidewalk, we tend to stay close to the people walking in the same direction as us, and we keep away from the people walking in the opposite direction to avoid collisions.

This holds not only for low-level “crowd dynamics” like queues and lanes, but also for higher-level forms of social behavior: we stand face-to-face with the people we mingle with and share offices with the people we work with. It is also true for relationships between people and objects: we face the appliances we interact with, and we keep close the objects we care about and need to grab often. In other words, to understand a person’s behavior, it is important to measure the context in which that person is immersed. To this end, we need to identify who and what is in somebody’s proximity, and how this changes over time. But how?

Proximity sensors

As it turns out, most people carry a powerful computer with plenty of sensors in their pocket: a smartphone. Nowadays, smartphones come with all sorts of sensors, and one of them is a proximity sensor, often implemented through a Bluetooth radio. Proximity sensors enable phones to detect other phones (or other devices with a Bluetooth radio, like a beacon) within a distance of a few meters. We are starting to install such sensors in our appliances as well, and more generally in our cities.

The way they work is very simple. Periodically, each device broadcasts its unique identifier over the air within a controlled range (for example, 3 meters), and any device that receives the broadcast can infer that it is within that range of the sender.
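
As a rough illustration of this mechanism, the Python sketch below simulates a single broadcast round. It is an idealized model, not a description of how Bluetooth behaves in practice: it assumes devices sit at known 2D positions and that every broadcast is received perfectly up to the range limit and not at all beyond it. The device names and the `detections` function are hypothetical.

```python
import math


def detections(positions, range_m=3.0):
    """Simulate one broadcast round.

    positions: dict mapping device id -> (x, y) coordinates in meters.
    Returns the set of (receiver, sender) pairs in which the receiver
    hears the sender, assuming an idealized, sharp range limit.
    """
    heard = set()
    for sender, (sx, sy) in positions.items():
        for receiver, (rx, ry) in positions.items():
            if receiver == sender:
                continue
            if math.hypot(sx - rx, sy - ry) <= range_m:
                heard.add((receiver, sender))
    return heard


positions = {
    "phone_a": (0.0, 0.0),
    "phone_b": (2.0, 1.0),    # about 2.2 m from phone_a
    "beacon_1": (10.0, 0.0),  # far from both phones
}
round_result = detections(positions)
print(sorted(round_result))
```

Note that the receivers never learn any coordinates; they only learn which identifiers they heard, which is all that proximity sensing provides.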

Why use proximity sensors?

Proximity information is less rich than the geographic coordinates of each individual, such as those provided by GPS. So why would one choose to collect less information? Because proximity is easier to measure, as it does not depend on complex infrastructure (like satellites in orbit). Not all aspects of collective and crowd behavior can be captured with proximity alone, but for those that can, its simplicity makes it possible to measure behavior at a larger scale. Luckily, queues, pedestrian lanes, communities, and co-worker relationships can all be analyzed through proximity information, and the possibilities are countless and yet to be fully explored.

Because of this simplicity, companies like Google, Apple, and Facebook are investing in platforms based on proximity data, for example through so-called beacons. Beacons are small, inexpensive devices equipped with a proximity sensor based on Bluetooth Low Energy that we can install at places like a shop, the hall of a train station, or a bus stop. They allow our phones to pinpoint where they are, hence enabling location-aware computing.

Proximity information also allows the owners of the beacons, like the shop owner or the manager of the train station, to gather a picture of how individuals behave and move inside of their space. With customer proximity data, practitioners can compute all sorts of analytics that are not so different from the analyses the same companies run on the traces their users leave behind when they interact with their products and platforms online through a browser. Is product placement effective? Do customers come back? How long do they spend in each department? What is the path used most commonly to reach train platforms throughout the day? When are bus stops crowded during the day?

The challenges down the proximity road

When one decides to go down the road of proximity-based spatial information, there are a number of challenges to tackle. First, when sensors are deployed in the wild, everything that can go wrong eventually will. Collecting reliable proximity measurements requires a robust data-collection infrastructure that is resilient to all sorts of environmental conditions, including crowd density, weather, power outages, and device failures.

Second, one needs to represent proximity data with a model that supports data analysis. One effective representation is the so-called proximity graph, in which nodes are entities (people or objects) and an edge connects two entities that were within a certain distance of each other.
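
To make the idea concrete, here is a minimal Python sketch that builds such a graph from raw detection records. The record format `(timestamp, receiver_id, sender_id)` and the choice of weighting each edge by the number of detections are assumptions made for illustration, not the representation used in any specific project.

```python
from collections import defaultdict


def build_proximity_graph(records):
    """Build an undirected, weighted proximity graph.

    records: iterable of (timestamp, receiver_id, sender_id) tuples
             (a hypothetical log format).
    Returns a dict: node -> {neighbor: number of detections}.
    """
    graph = defaultdict(lambda: defaultdict(int))
    for _timestamp, receiver, sender in records:
        # Proximity is symmetric, so record the edge in both directions.
        graph[receiver][sender] += 1
        graph[sender][receiver] += 1
    return {node: dict(neighbors) for node, neighbors in graph.items()}


records = [
    (0, "alice", "bob"),
    (1, "alice", "bob"),
    (1, "bob", "carol"),
]
graph = build_proximity_graph(records)
print(graph["bob"])
```

The edge weight here acts as a crude proxy for how long two entities stayed close. Keeping the timestamps instead would yield a time-varying graph, which is what one needs to study how proximity changes over time.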

Third, once the data is collected and represented through proximity graphs, one needs to identify the right data mining algorithm to recognize, quantify, and qualify the behavior of interest. Graphs are well studied in the computer science literature, and there are a number of algorithms that can be used or adapted to understand behavior in proximity graphs.

The EWiDS project

During the EWiDS project, which brought together researchers from, among others, the Vrije Universiteit Amsterdam and the Delft University of Technology, we developed a number of technologies to collect and analyze proximity information in the wild, in a number of interesting scenarios.

Crowd behavior in museums

At the CoBrA Museum of Modern Art in Amsterdam, we deployed a number of proximity sensors, some installed at artworks and others worn by visitors, and used them to measure how long volunteering visitors spent in front of each artwork and in which order. Subsequently, we used the collected data to inform the museum staff about the behavior of their visitors, in particular how visitors distributed their time across paintings and rooms.
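
The kind of measurement described above can be sketched as follows. This Python example is a simplified illustration, not the pipeline used at the museum: it assumes each detection is a `(timestamp_s, visitor_id, artwork_id)` tuple and that one detection corresponds to one scan interval spent near the artwork. All names are hypothetical.

```python
from collections import defaultdict


def dwell_times(detections, scan_interval_s=1.0):
    """Estimate time spent per artwork and the order of visits.

    detections: iterable of (timestamp_s, visitor_id, artwork_id).
    Returns a dict: visitor -> {artwork: seconds}, where each inner
    dict lists artworks in order of first detection.
    """
    result = defaultdict(dict)
    # Sorting by timestamp means the insertion order of each inner dict
    # reflects the order in which the visitor first reached each artwork.
    for _t, visitor, artwork in sorted(detections):
        previous = result[visitor].get(artwork, 0.0)
        result[visitor][artwork] = previous + scan_interval_s
    return dict(result)


log = [
    (0, "v1", "painting_A"),
    (1, "v1", "painting_A"),
    (2, "v1", "painting_B"),
    (0, "v2", "painting_B"),
]
times = dwell_times(log)
print(times["v1"])        # seconds per artwork for visitor v1
print(list(times["v1"]))  # order in which v1 visited the artworks
```

A real deployment would also have to handle missed detections and visitors standing between two artworks, which this sketch ignores.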

We also clustered visitor data to identify group behavior. We discovered that around 10% of the visitors actually went through the exhibition from end to start, perhaps confused by the signs. In addition, the majority of visitors tended to walk along the perimeter of the museum, giving less attention to the more internal walls (see Figure 1). We were also able to show that there were groups of individuals who distributed their time across paintings in a similar way, perhaps due to similar taste, even though these visitors did not come to the museum together. We leveraged this to predict how much time a visitor would spend at an artwork, based on their past behavior.
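
One simple way to compare how two visitors distribute their time is sketched below in Python. The text does not specify which clustering method was used, so cosine similarity over time shares is an assumption chosen purely for illustration, and the function names and figures are hypothetical.

```python
import math


def time_share(dwell):
    """Convert seconds per artwork into fractions of the total visit."""
    total = sum(dwell.values())
    if total == 0:
        return {}
    return {artwork: t / total for artwork, t in dwell.items()}


def cosine_similarity(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(value * b.get(key, 0.0) for key, value in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Two visitors with different visit lengths but the same proportions,
# and a third visitor who looked at a different artwork entirely.
v1 = time_share({"painting_A": 60, "painting_B": 20})
v2 = time_share({"painting_A": 30, "painting_B": 10})
v3 = time_share({"painting_C": 45})

print(cosine_similarity(v1, v2))
print(cosine_similarity(v1, v3))
```

Pairwise similarities like these could then be fed to a standard clustering algorithm to find groups of like-minded visitors.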

A similar study was conducted by the Van Gogh Museum, but instead of sensors, they used human observers who tracked the movements of a number of individuals. They obtained data similar to what we obtained at CoBrA (although, being based on human observers, it was a one-shot study), and they used it to rearrange part of their exhibition, which confirms the validity of the approach.

We ran a similar experiment at the NEMO Science Museum in Amsterdam. There, we wanted to test whether our monitoring technique could operate in the conditions where it is most needed (and where existing technologies fail): in a complex and crowded building. We therefore ran the experiment during the days before Christmas, when NEMO hosts some of its largest crowds.

The NEMO building is particularly challenging for sensing technologies, as it is a big multi-storey open space, full of kids running around. Another interesting aspect of NEMO is that it has some periodic events that attract the attention of large portions of the visitors, potentially creating flows of people across floors, and high densities around the area where the event takes place.

Figure 2 shows how the number of people approaching floor 1, where the “Chain Reaction” event takes place, increases right before the event starts at 11:15 AM, 12:15 PM, 2:45 PM, 3:15 PM, and 4:45 PM. The number of people leaving the floor spikes right after the event finishes 15 minutes later. These measurements provide a quantitative basis for provisioning enough space for visitors at the event location.

Finally, not all aspects of crowd behavior are purely spatiotemporal and hence measurable with proximity sensors. The more internal experience of visitors, such as their mood, is of great interest as well. For this reason, together with Holland Dance, an organization that promotes dancing in the Netherlands, we instrumented the audience of a live dance performance with both proximity sensors and accelerometers (both present in today’s smartphones) and measured their behavior before, during, and after the event. With the data, we were able to predict audience responses to a questionnaire about their enjoyment that we asked them to fill in after the performance.

In a nutshell

To conclude, sensors are opening a window from the digital world onto our life in the real world. The number of sensors and devices we wear and install in our homes is growing, and this is just the beginning. The next generation of appliances and things is expected to collect, share, and analyze a large volume of data about our behavior, which we can put to great use to increase our safety and comfort.

A number of challenges lie ahead. Many of them involve guaranteeing privacy, but others concern the fusion of multiple sources of data, energy efficiency, new behavior prediction models, and models that tell us how to intervene to steer behavior towards desirable outcomes. Yet these are only the first exciting steps towards building a world that fits our needs. With the rise of machine learning, which can only benefit from this massive volume of sensor data, we can only imagine the applications we will come up with in the future.

Read the research article here.

Share your Science

The Share your Science section aims at giving students and researchers a space to share their thesis to a broader audience. UA Magazine wants to increase the visibility of recent academic work and serve as a bridge between universities and societies.